What are our data sources?

We use the data sources on the side for ranking solutions and awarding badges in synthetic data generator category. Our data sources in synthetic data generator category include;

Data is the new oil and like oil, it is scarce and expensive. Companies rely on data to build machine learning models which can make predictions and improve operational decisions. When historical data is not available or when the available data is not sufficient because of lack of quality or diversity, companies rely on synthetic data to build models.

Synthetic data has been dramatically increasing in quality. And its quantity makes up for issues in quality. For example, most self-driving kms are accumulated with synthetic data produced in simulations.

If you’d like to learn about the ecosystem consisting of Synthetic Data Generator and others, feel free to check AIMultiple Data.

AIMultiple is data driven. Evaluate 20 services based on

comprehensive, transparent and objective AIMultiple scores.

For any of our scores, click the information icon to learn how it is

calculated based on objective data.

*Products with visit website buttons are sponsored

We use the data sources on the side for ranking solutions and awarding badges in synthetic data generator category. Our data sources in synthetic data generator category include;

review websites

social media websites

search engine data for branded queries

According to the weighted combination of 7 data sources

MDClone

Genrocket

BizDataX

MOSTLY AI

YData

Taking into account the latest metrics outlined below, these are the current synthetic data generator market leaders. Market leaders are not the overall leaders since market leadership doesn’t take into account growth rate.

Genrocket

MDClone

BizDataX

MOSTLY AI

YData

These are the number of queries on search engines which include the brand name of the solution. Compared to other Data categories, Synthetic Data Generator is more concentrated in terms of top 3 companies’ share of search queries. Top 3 companies receive 69%, 7% more than the average of search queries in this area.

4,408 employees work for a typical company in this solution category which is 4,387 more than the number of employees for a typical company in the average solution category.

In most cases, companies need at least 10 employees to serve other businesses with a proven tech product or service. 8 companies with >10 employees are offering synthetic data generator. Top 3 products are developed by companies with a total of 4k employees. The largest company building synthetic data generator is Informatica with more than 4,000 employees.

Taking into account the latest metrics outlined below, these are the fastest growing solutions:

MDClone

Genrocket

MOSTLY AI

BizDataX

YData

We have analyzed reviews published in the last months. These were published in 4 review platforms as well as vendor websites where the vendor had provided a testimonial from a client whom we could connect to a real person.

These solutions have the best combination of high ratings from reviews and number of reviews when we take into account all their recent reviews.

This data is collected from customer reviews for all Synthetic Data Generator companies. The most positive word describing Synthetic Data Generator is “Easy to use” that is used in 10% of the reviews. The most negative one is “Difficult” with which is used in 3.00% of all the Synthetic Data Generator reviews.

According to customer reviews, most common company size for synthetic data generator customers is 1,001+ employees. Customers with 1,001+ employees make up 45% of synthetic data generator customers. For an average Data solution, customers with 1,001+ employees make up 39% of total customers.

These scores are the average scores collected from customer reviews for all Synthetic Data Generators. Synthetic Data Generators is most positively evaluated in terms of "Customer Service" but falls behind in "Ease of Use".

This category was searched on average for 2.2k times per month on search engines in 2022. This number has increased to 2.4k in 2023. If we compare with other data solutions, a typical solution was searched 1.3k times in 2022 and this decreased to 1k in 2023.

There are 2 categories of approaches to synthetic data: modelling the observed data or modelling the real world phenomenon that outputs the observed data.

Modelling the observed data starts with automatically or manually identifying the relationships between different variables (e.g. education and wealth of customers) in the dataset. Based on these relationships, new data can be synthesized.

Simulation(i.e. Modelling the real world phenomenon) requires a strong understanding of the input output relationship in the real world phenomenon. A good example is self-driving cars: While we know the physical mechanics of driving and we can evaluate driving outcomes (e.g. time to destination, accidents), we still have not built machines that can drive like humans. As a result, we can feed data into simulation and generate synthetic data.

As expected, synthetic data can only be created in situations where the system or researcher can make inferences about the underlying data or process. Generating synthetic data on a domain where data is limited and relations between variables is unknown is likely to lead to a garbage in, garbage out situation and not create additional value.

Synthetic data enables data-driven, operational decision making in areas where it is not possible.

Any business function leveraging machine learning that is facing data availability issues can get benefit from synthetic data.

Any company leveraging machine learning that is facing data availability issues can get benefit from synthetic data.

Synthetic data is especially useful for emerging companies that lack a wide customer base and therefore significant amounts of market data. They can rely on synthetic data vendors to build better models than they can build with the available data they have. With better models, they can serve their customers like the established companies in the industry and grow their business.

Major use cases include:

Increasing reliance on deep learning and concerns regarding personal data create strong momentum for the industry. However, deep learning is not the only machine learning approach and humans are able to learn from much fewer observations than humans. Improved algorithms for learning from fewer instances can reduce the importance of synthetic data.

Synthetic data companies can create domain specific monopolies. In areas where data is distributed among numerous sources and where data is not deemed as critical by its owners, synthetic data companies can aggregate data, identify its properties and build a synthetic data business where competition will be scarce. Since quality of synthetic data also relies on the volume of data collected, a company can find itself in a positive feedback loop. As it aggregates more data, its synthetic data becomes more valuable, helping it bring in more customers, leading to more revenues and data.

Access to data and machine learning talent are key for synthetic data companies. While machine learning talent can be hired by companies with sufficient funding, exclusive access to data can be an enduring source of competitive advantage for synthetic data companies. To achieve this, synthetic data companies aim to work with a large number of customers and get the right to use their learnings from customer data in their models.

Please note that this does not involve storing data of their customers. Synthetic data companies build machine learning models to identify the important relationships in their customers' data so they can generate synthetic data. If their customers gives them the permission to store these models, then those models are as useful as having access to the underlying data until better models are built.

Synthetic data is any data that is not obtained by direct measurement. McGraw-Hill Dictionary of Scientific and Technical Terms provides a longer description: "any production data applicable to a given situation that are not obtained by direct measurement".

Synthetic data allow companies to build machine learning models and run simulations in situations where either

Specific integrations for are hard to define in synthetic data. Synthetic data companies need to be able to process data in various formats so they can have input data. Additionally, they need to have real time integration to their customers' systems if customers require real time data anonymization.

For deep learning, even in the best case, synthetic data can only be as good as observed data. Therefore, synthetic data should not be used in cases where observed data is not available.

Synthetic data can not be better than observed data since it is derived from a limited set of observed data. Any biases in observed data will be present in synthetic data and furthermore synthetic data generation process can introduce new biases to the data.

It is also important to use synthetic data for the specific machine learning application it was built for. It is not possible to generate a single set of synthetic data that is representative for any machine learning application. For example, this paper demonstrates that a leading clinical synthetic data generator, Synthea, produces data that is not representative in terms of complications after hip/knee replacement.

While computer scientists started developing methods for synthetic data in 1990s, synthetic data has become commercially important with the widespread commercialization of deep learning. Deep learning is data hungry and data availability is the biggest bottleneck in deep learning today, increasing the importance of synthetic data.

Deep learning has 3 non-labor related inputs: computing power, algorithms and data. Machine learning models have become embedded in commercial applications at an increasing rate in 2010s due to the falling costs of computing power, increasing availability of data and algorithms.

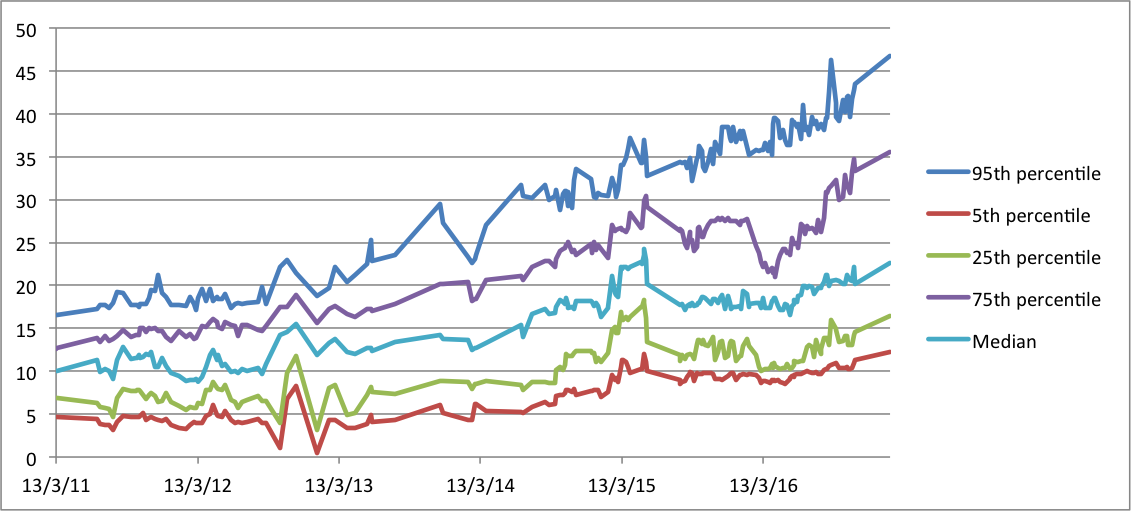

Figure:PassMark Software built a GPU benchmark with higher scores denoting higher performance. Figure includes GPU performance per dollar which is increasing over time

While algorithms and computing power are not domain specific and therefore available for all machine learning applications, data is unfortunately domain specific (e.g. you can not use customer purchasing behavior to label images). This makes data the bottleneck in machine learning.

Deep learning relies on large amounts of data and synthetic data enables machine learning where data is not available in the desired amounts and prohibitely expensive to generate by observation.

While data availability has increased in most domains, companies face a chicken and egg situation in domains like self-driving cars where data on the interaction of computer systems and the real world is scarce. Companies like Waymo solve this situation by having their algorithms drive billions of miles of simulated road conditions.

In other cases, a company may not have the right to process data for marketing purposes, for example in the case of personal data. Companies historically got around this by segmenting customers into granular sub-segments which can be analyzed. Some telecom companies were even calling groups of 2 as segments and using them to predict customer behaviour. However, General Data Protection Regulation (GDPR) has severely curtailed company's ability to use personal data without explicit customer permission. As a result, companies rely on synthetic data which follows all the relevant statistical properties of observed data without having any personally identifiable information. This allow companies to run detailed simulations and observe results at the level of a single user without relying on individual data.

Observed data is the most important alternative to synthetic data. Instead of relying on synthetic data, companies can work with other companies in their industry or data providers. Another alternative is to observe the data.

The only synthetic data specific factor to evaluate for a synthetic data vendor is the quality of the synthetic data. It is recommended to have a through PoC with leading vendors to analyze their synthetic data and use it in machine learning PoC applications and assess its usefulness.

Typical procurement best practices should be followed as usual to enable sustainability, price competitiveness and effectiveness of the solution to be deployed.

Wikipedia categorizes synthetic data as a subset of data anonymization. This is true only in the most generic sense of the term data anonimization. For example, companies like Waymo use synthetic data in simulations for self-driving cars. In this case, a computer simulation involves modelling all relevant aspects of driving and having a self-driving car software take control of the car in simulation to have more driving experience. While this indeed creates anonymized data, it can hardly be called data anonymization because the newly generated data is not directly based on observed data. It is only based on a simulation which was built using both programmer's logic and real life observations of driving.